Testing Turnitin’s New AI Detector: How Accurate Is It?

Image Credit: Bloomberg / Getty Images

- Turnitin recently launched a new tool to detect language written with artificial intelligence.

- The company claims its tool is 98% accurate in detecting content created by AI.

- A test of the tool using various forms of ChatGPT-generated copy revealed remarkable accuracy.

- A year after the launch, Turnitin says more than 10% of papers include at least 20% AI writing.

Artificial intelligence, it seems, is taking over the world. At least that’s what alarmists would have you believe. The line between fact and fiction continues to blur, and recognizing what is real versus what some bot concocted grows increasingly difficult with each passing week.

This emerging AI landscape holds serious consequences for writers and other creatives whose work can be produced more efficiently, if not effectively, by sophisticated tools such as ChatGPT.

College professors face a different set of challenges: cheating, plagiarism, and the temptation AI presents to students. A recent study cited college faculty among the professions most “exposed to advances in AI language modeling capabilities.”

Like many others, I happen to be both a writer and a college professor. And I teach writing, which means my assignments are particularly vulnerable to AI interference.

Enter Turnitin, an industry leader in detecting “similarity” — not necessarily plagiarism — in student work. The company recently debuted its new AI detector, which promises to flag AI-produced content in submitted papers.

I thought it would be fun and instructive to take it for a test drive.

Taking Turnitin’s New AI Detector for a Spin

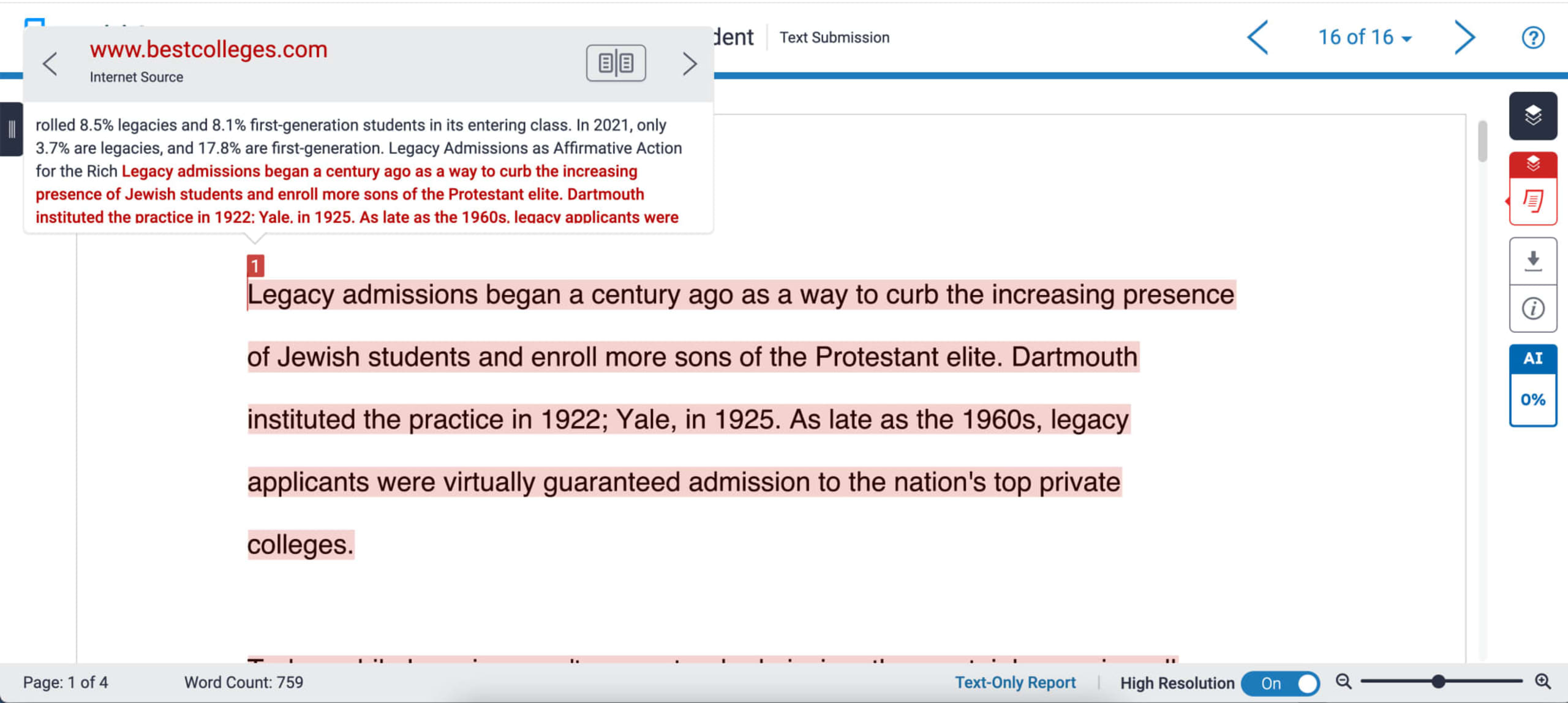

As a faculty member at Johns Hopkins University, I use Turnitin for all my writing assignments. When students submit their papers, Turnitin offers an assessment such as this:

In this case, Turnitin highlights a passage lifted from my BestColleges article on legacy admissions, showing both the copied text and the source.

But notice the blue box on the right that says “AI 0%.” That’s the new component, which tells us this assignment contains no AI-generated content.

Turnitin claims its tool is 98% accurate in detecting content created by AI.

“Even they understand there’s a 1 in 50 chance that it is human and that it’s a false positive,” Chris Mueck, an instructional technologist at Johns Hopkins, told BestColleges.

Annie Chechitelli, Turnitin’s chief product officer, offered a slightly different calculus.

“We would rather miss some AI writing than have a higher false positive rate,” she told BestColleges. “So we are estimating that we find about 85% of it. We let probably 15% go by in order to reduce our false positives to less than 1 percent.”
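To see what those two rates might mean in practice, here is a rough back-of-the-envelope sketch in Python. The 85% detection rate and the 1% false positive ceiling come from Chechitelli's estimate. The batch of 1,000 papers and the 10% share of AI-written papers are assumptions made up purely for illustration, and the function name `expected_flags` is hypothetical. The sketch also treats each paper as either AI-written or human-written, which is simpler than the percentage scores the tool actually reports.

```python
def expected_flags(total_papers, ai_share, detection_rate, false_positive_rate):
    """Estimate how many papers a detector would flag, rightly or wrongly.

    All inputs are plain fractions (0.85 means 85%). The two-way split into
    "AI" and "human" papers is a simplifying assumption for illustration.
    """
    ai_papers = total_papers * ai_share
    human_papers = total_papers - ai_papers

    caught = ai_papers * detection_rate                # AI papers correctly flagged
    missed = ai_papers - caught                        # AI papers that slip by
    false_alarms = human_papers * false_positive_rate  # human papers wrongly flagged

    flagged = caught + false_alarms
    human_share_of_flags = false_alarms / flagged if flagged else 0.0

    return {
        "caught": caught,
        "missed": missed,
        "false_alarms": false_alarms,
        "human_share_of_flags": human_share_of_flags,
    }


# Hypothetical batch: 1,000 papers, 10% of them AI-written (assumed numbers).
# Rates quoted in the article: about 85% detected, under 1% false positives.
result = expected_flags(
    total_papers=1000,
    ai_share=0.10,
    detection_rate=0.85,
    false_positive_rate=0.01,
)

print(f"AI papers caught:        {result['caught']:.0f}")
print(f"AI papers missed:        {result['missed']:.0f}")
print(f"Human papers flagged:    {result['false_alarms']:.0f}")
print(f"Share of flags on human: {result['human_share_of_flags']:.1%}")
```

Under those assumed numbers, roughly 85 AI-written papers would be flagged, 15 would slip by, and about 9 human-written papers would be flagged in error, so close to 1 in 10 flags would point at a human writer. A smaller share of AI-written papers in the batch would push that fraction higher.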

Chechitelli explained that the technology used in detecting AI content is entirely different from what is used to detect plagiarized copy. While the traditional Turnitin function searches for similarities among existing texts (instances of plagiarism), the new feature looks for something else.

“What we’re able to do is look at actual student writing and [determine] how often the next most probable word is used, which is not often, and then compare that to ChatGPT content to show the differences,” she said. “We use a statistical measure to say this consecutive segment and the way that it’s strung the words along looks like it’s coming from ChatGPT, whereas this one has more idiosyncrasy, it has more variety.”
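The idea Chechitelli describes, checking how often a writer picks the most probable next word, can be illustrated with a toy sketch. This is not Turnitin's method, which the company has not published in detail. It swaps in a tiny word-pair (bigram) count model for whatever language model the real tool uses, and the function names and sample sentences are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram_model(reference_tokens):
    """Count which word follows which in a reference text (toy language model)."""
    following = defaultdict(Counter)
    for prev_word, next_word in zip(reference_tokens, reference_tokens[1:]):
        following[prev_word][next_word] += 1
    return dict(following)


def top_choice_rate(tokens, model):
    """Fraction of words that match the model's single most probable next word.

    Word pairs whose first word the model has never seen are skipped.
    Returns 0.0 when nothing can be scored.
    """
    hits = 0
    scored = 0
    for prev_word, actual_word in zip(tokens, tokens[1:]):
        if prev_word not in model:
            continue
        scored += 1
        predicted_word = model[prev_word].most_common(1)[0][0]
        if actual_word == predicted_word:
            hits += 1
    return hits / scored if scored else 0.0


# Hypothetical reference text standing in for "what a model expects."
reference = (
    "the student wrote the essay and the student read the essay "
    "and the teacher read the essay"
).split()
model = train_bigram_model(reference)

# A predictable sample reuses the most common word pairs.
predictable = "the student read the essay".split()
# A more idiosyncratic sample makes less expected choices.
idiosyncratic = "the teacher wrote and read".split()

print("predictable:  ", top_choice_rate(predictable, model))
print("idiosyncratic:", top_choice_rate(idiosyncratic, model))
```

A higher rate means the text leans on the most expected next word more often, which is the pattern Chechitelli attributes to ChatGPT. A lower rate reflects the "idiosyncrasy" and "variety" she associates with student writing. A real detector would rely on a far larger model and a calibrated statistical threshold, neither of which is shown here.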

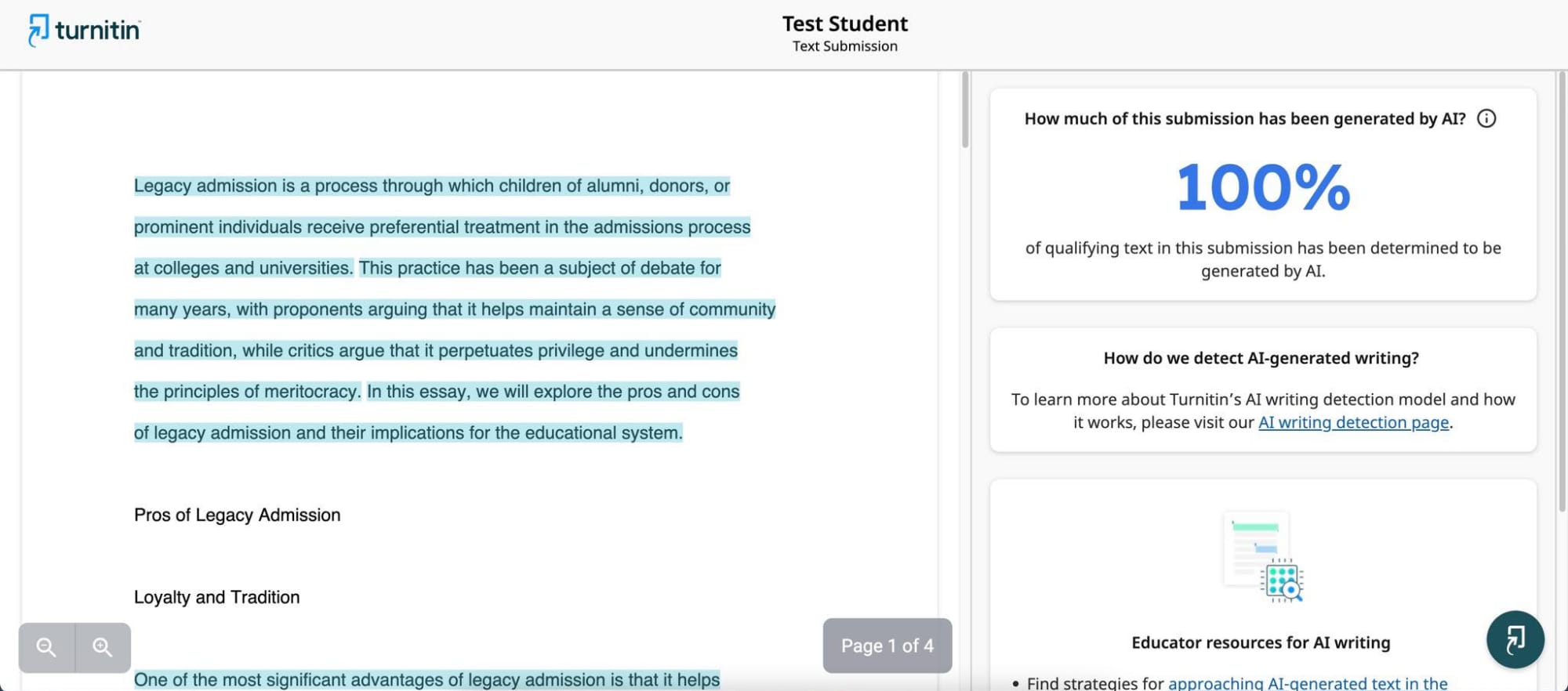

This new technology turns out to be remarkably accurate. After it correctly assigned a 0% score to the piece I wrote myself, I fed it an essay I created using ChatGPT.

It returned an assessment indicating 100% AI. Clicking on the blue box revealed this:

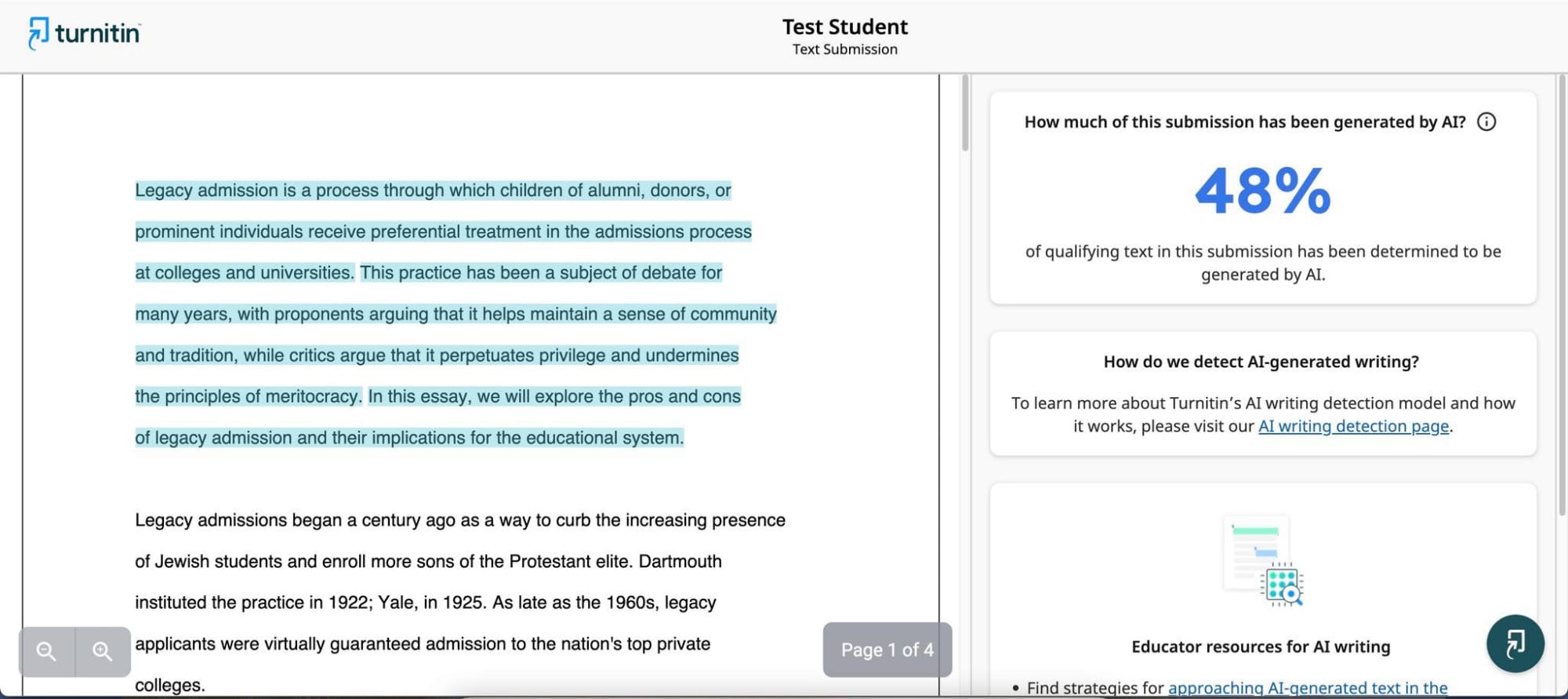

So it nailed both the 0% AI-written submission and the 100% one. How about a hybrid version? I took the ChatGPT copy and substituted what I estimated to be about 35% of my own copy. Here’s the result:

It’s not exact, but it’s sophisticated enough to recognize some portion of the paper was AI-generated, pointing out those suspect sections. Donning my professorial cap, I’d give this effort an A-. Pretty impressive for a tool trying to keep pace with technology that’s evolving at light speed.

By comparison, another site offering AI detection, GPTZero, returned a puzzling analysis. When I fed it 100% AI content, it said, “Your text is likely to be written entirely by a human,” though it highlighted sections it thought were AI-generated.

And when I submitted the hybrid version, it flagged my own passages as “more likely to be written by AI.” I take great pride in not writing like a bot.

Advantage Turnitin.

Will Turnitin Curb Student Cheating?

One big question is whether Turnitin will dissuade students from submitting content generated by ChatGPT and similar sites. A recent BestColleges survey revealed that about 1 in 5 college students (22%) admitted to using AI to complete assignments.

Similarly, a study soon to be released by Turnitin notes that 25% of students say they use AI for writing assignments every day, Chechitelli mentioned. By contrast, she said, about 70% of faculty and administrators say they’ve never used AI for their writing.

“So there’s this massive difference between where the students are and where the teachers and administrators are,” she said. “We’ve embarked on this journey, beyond just building the software, of figuring out what we have to do to close that gap.”

Across Turnitin’s users, Chechitelli said, about 10% of submitted papers contain more than 20% AI-generated content. Within that 10%, 4% is 80-100% AI-generated.

To be fair, using ChatGPT to write a paper isn’t entirely unethical. It’s remarkably adept at ideation and creating a logical flow of information, even if some of that information has questionable validity. Submitting that output as your own constitutes cheating, but as students substitute more and more of their own thoughts and words for what the bot spit out, the gray area grows ever grayer.

“The sense of satisfaction you get from crafting a good prompt can give you that feeling of ownership of the product,” Mueck explained.

Mueck added that initial Johns Hopkins faculty feedback has been positive. Might the new technology persuade students not to cheat?

“I think that it would,” Mueck said.

As new iterations of ChatGPT evolve and similar tools appear, Turnitin will continue to modify its tool in response. The company will remain forthcoming with the public about those changes, to a point.

“We want to be very transparent,” Chechitelli said, “but we also don’t want to give students a roadmap to cheat.”

Meanwhile, I’ll be curious to see how this new tool fares in my course next fall. It’s an AI world nowadays, and we’re all just living in it.

Update: One Year Later

Since launching its AI detector a year ago, Turnitin has reviewed more than 200 million papers using this tool.

According to a recent release from the company, about 11% of those papers indicated at least 20% AI writing present, while 3% indicated more than 80%.

These figures correspond almost exactly to what Turnitin found in July 2023, only a few months after the launch. At the time, Turnitin had reviewed more than 65 million papers.

Assuming the tool is reliable, these data suggest student behavior hasn’t changed much over the past year.

At the same time, a fall 2023 study by Tyton Partners found that nearly half of college students use generative AI monthly, weekly, or daily, and that 75% of students said they’d continue using it even if their campus banned these tools.